what is a network?

a network is two or more devices connected so they can communicate and share resources. it can be very small, like two computers connected by a cable in a home setup, or extremely large, like the internet, which connects devices all over the world.

networks can include things like phones, personal computers, servers, printers, security systems, and even wearable devices.

these devices communicate using different types of connections, such as wired cables, fiber optics, or wireless signals.

this section focuses on the basics of how networks work and how technicians support and maintain them.

network models

in networking, a topology is a way of describing how a network is organized and how its parts relate to each other.

to really understand a network, you need to look at it in two different ways: how it physically exists, and how it logically operates.

physical topology

physical topology refers to the tangible parts of a network. this includes computers, switches, routers, and the cables or wireless signals that connect them.

it's what you would see if you could lay the entire network out in front of you.

logical topology

logical topology focuses on how the network functions rather than how it looks. it describes how software controls connections, manages access, and allows data to move between devices.

this includes how users and applications gain access to the network and how resources like programs and data are shared.

if physical topology is the visible world of devices and cables, logical topology is the unseen layer: the “wired” space where information flows. basically, this is where lain exists ( ´ ꒳ ` ) ♡

this part introduces network models, which help explain how computers relate to each other on a network and how access to resources is managed.

later on, physical components and physical topologies are covered, but for now the focus is on how networks function at the software and operating system level.

controlling who can access network resources is handled by the operating systems used on the network. the operating system determines how users and programs connect and interact.

most networks follow one of two models: peer-to-peer or client-server.

a peer-to-peer model allows devices like desktops, laptops, tablets, or phones to share resources directly with each other.

the client-server model relies on a dedicated system that manages access for the entire network. this typically requires a network operating system that controls users, permissions, and shared resources.

examples of a nos (network operating system) include server editions of windows, such as windows server, and linux distributions designed specifically for managing networks.

peer-to-peer network model

in a peer-to-peer (p2p) network, each computer is responsible for managing access to its own resources. there is no central system controlling the network.

the computers on the network (often called nodes or hosts) form a group that shares resources directly with one another. each device handles its own users, security settings, and shared files.

p2p networks can use many common operating systems, such as windows, linux, macos, or chrome os on desktops and laptops, and ios or android on mobile devices.

devices in a p2p network share resources using built-in file sharing features or user accounts. many operating systems can share files with other devices even if they are running different operating systems.

in some setups, each computer keeps its own list of users and permissions. access to files and folders is based on these local settings, which can become difficult to manage as the network grows.

p2p networks are generally best for small environments, usually fewer than about 15 computers.

advantages of peer-to-peer networks

- simple to set up and configure

- useful when time or technical expertise is limited

- usually less expensive than networks that require a dedicated server

disadvantages of peer-to-peer networks

- not scalable. managing the network becomes harder as more devices are added

- security can be weak in simple setups, making shared data easier to access without proper authorization

- not practical for managing many users or shared resources

- not practical for centralized file storage, since shared files are spread across individual computers instead of kept on one server

client-server network model

in a client-server network, access to shared resources is controlled by a central system rather than by individual computers.

on windows networks, when windows server manages sign-ins and permissions for a group of computers, that logical group is called a domain.

the centralized database that stores user accounts and security information is called active directory (ad).

each user is assigned a domain-level account by a network administrator, and access to network resources is controlled through active directory domain services (ad ds).

a user can sign in from any computer on the network and access resources based on the permissions assigned to their account.

in this model, a device that requests data or services is called the client. clients can still run applications locally and store files on their own devices, but shared resources are controlled centrally.

what the network operating system (nos) handles

- managing shared data and other network resources

- ensuring only authorized users can access the network

- controlling which files and resources users can read or modify

- restricting when and from where users can sign in

- defining the rules computers use to communicate

- in some cases, delivering applications or shared files to clients
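to make a few of these nos duties concrete (controlling who can read or modify a resource, and restricting when and from where users sign in), here is a toy sketch. the `Account` class, the `PERMISSIONS` table, the group names, and the file names are all hypothetical; a real nos such as windows server stores and enforces these rules very differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Account:
    """a hypothetical user account, as a central server might store it."""
    name: str
    groups: set
    allowed_hours: range = range(0, 24)  # hours of the day the user may sign in
    allowed_workstations: Optional[set] = None  # None means "any workstation"


# hypothetical permission table: resource -> group -> allowed actions
PERMISSIONS = {
    "payroll.xlsx": {"hr": {"read", "modify"}, "managers": {"read"}},
    "handbook.pdf": {"everyone": {"read"}},
}


def can_sign_in(acct: Account, hour: int, workstation: str) -> bool:
    """restrict when and from where a user can sign in."""
    if hour not in acct.allowed_hours:
        return False
    if acct.allowed_workstations is not None and workstation not in acct.allowed_workstations:
        return False
    return True


def can_access(acct: Account, resource: str, action: str) -> bool:
    """allow an action if any of the user's groups grants it on the resource."""
    rules = PERMISSIONS.get(resource, {})
    groups = acct.groups | {"everyone"}  # every user is implicitly in "everyone"
    return any(action in rules.get(group, set()) for group in groups)


alice = Account("alice", {"hr"}, allowed_hours=range(8, 18))
bob = Account("bob", {"sales"}, allowed_workstations={"SALES-PC1"})
print(can_sign_in(alice, 9, "ANY-PC"))              # True
print(can_sign_in(alice, 22, "ANY-PC"))             # False (outside allowed hours)
print(can_access(alice, "payroll.xlsx", "modify"))  # True
print(can_access(bob, "payroll.xlsx", "read"))      # False
print(can_access(bob, "handbook.pdf", "read"))      # True
```

granting permissions to groups rather than to individual users is what keeps this manageable: adding someone to "hr" gives them every hr permission at once.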

because servers must handle requests from many clients at the same time, they usually require more processing power, memory, storage, and network bandwidth than client machines.

for reliability, servers may use raid (redundant array of independent disks). most raid configurations combine several drives so that data survives if one drive fails.
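one common way raid protects data is parity, used for example in raid 5: an extra block is computed as the xor of the data blocks, so any single missing block can be rebuilt from the others. the sketch below shows only that xor idea with made-up 4-byte "drives"; real raid works on disk stripes in the controller or operating system, not in python.

```python
def xor_blocks(*blocks: bytes) -> bytes:
    """xor equal-length byte blocks together, byte by byte."""
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("all blocks must be the same length")
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


# three toy "drives", each holding one block of the same stripe
drive_a = b"NETW"
drive_b = b"ORK+"
drive_c = b"RAID"
parity = xor_blocks(drive_a, drive_b, drive_c)  # stored on a fourth drive

# simulate drive_b failing: xor the surviving blocks with the parity block
rebuilt_b = xor_blocks(drive_a, drive_c, parity)
print(rebuilt_b)  # b'ORK+'
```

this works because xor-ing a value with itself cancels out: a ^ c ^ (a ^ b ^ c) leaves only b. it also shows the limit of single parity: lose two drives at once and the data cannot be rebuilt.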

advantages of client-server networks

- user accounts and passwords are managed in one central location

- access to shared resources can be assigned to individual users or groups

- network issues can often be monitored and resolved from one place

- more scalable than peer-to-peer networks
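the scalability difference between the two models comes down to where accounts live. this toy comparison counts how many separate admin actions it takes to give two users access to 15 computers (the rough upper limit mentioned for p2p). both classes are hypothetical simplifications, not how windows or any real directory service is implemented.

```python
class PeerToPeerNetwork:
    """each computer keeps its own local user list; nothing is central."""

    def __init__(self, computers):
        self.local_users = {pc: set() for pc in computers}
        self.changes = 0  # separate admin actions performed

    def add_user(self, user):
        # the account has to be created on every computer the user needs
        for users in self.local_users.values():
            users.add(user)
            self.changes += 1

    def can_sign_in(self, user, pc):
        return user in self.local_users[pc]


class ClientServerNetwork:
    """a central directory (like a domain's ad database) holds every account."""

    def __init__(self, computers):
        self.computers = set(computers)
        self.directory = set()
        self.changes = 0

    def add_user(self, user):
        self.directory.add(user)  # one change, valid on every domain computer
        self.changes += 1

    def can_sign_in(self, user, pc):
        return pc in self.computers and user in self.directory


pcs = [f"PC{i}" for i in range(1, 16)]  # 15 computers
p2p = PeerToPeerNetwork(pcs)
domain = ClientServerNetwork(pcs)
for name in ["alice", "bob"]:
    p2p.add_user(name)
    domain.add_user(name)

print(p2p.changes, domain.changes)  # 30 2
```

the p2p count grows with users × computers, while the central directory grows only with users, which is the practical meaning of "more scalable".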

network services

networks provide more than just connections between devices. they also make resources available, such as applications and the data those applications use.

in addition to shared resources, networks rely on services that handle how devices connect, communicate, and exchange information with each other.

together, these shared resources and supporting functions are referred to as network services.

this section focuses on common network services that are typically present on most networks.

network services usually involve at least two endpoint devices. one device requests data or a service, and another device provides it.

the requesting device is known as the client, while the device supplying the resource or service is the server.

for example, when someone uses a web browser to load a webpage, the browser sends a request to a web server, which responds by sending the requested data back.

the client and server do not need to be on the same local network. they can communicate across multiple connected networks, such as the internet, which is why a browser can load a page from a web server on the other side of the world.

network protocols

devices communicate using shared rules called protocols. these rules define how data is requested, sent, and understood.

think of it like speaking german to someone who only speaks vietnamese. both languages are real languages, but without a shared language, nothing makes sense.

most modern networks rely on the tcp/ip protocol suite to support communication across local networks and the internet.

you can think of tcp/ip as the common language the internet agrees on. without it, devices wouldn't know how to send or receive information.
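here is a minimal sketch of a client and a server talking over tcp/ip, using python's standard `socket` module. to keep it self-contained, both sides run on the same machine over the loopback address 127.0.0.1, and the server runs in a background thread; on a real network the two would be different devices. the helper names `read_all` and `serve_one` are made up for this example.

```python
import socket
import threading


def read_all(sock: socket.socket) -> bytes:
    """read from a tcp socket until the other side stops sending."""
    chunks = []
    while chunk := sock.recv(1024):
        chunks.append(chunk)
    return b"".join(chunks)


def serve_one(server_sock: socket.socket) -> None:
    """server role: accept one client, read its request, send a reply."""
    conn, _addr = server_sock.accept()
    with conn:
        request = read_all(conn)
        conn.sendall(b"server got: " + request)
    # leaving the with-block closes the connection, telling the client we're done


# server side: listen on loopback; port 0 asks the os for any free port
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)  # ipv4 + tcp
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()
worker = threading.Thread(target=serve_one, args=(server,))
worker.start()

# client side: connect, send a request, signal "done sending", read the reply
with socket.create_connection((host, port)) as client:
    client.sendall(b"hello")
    client.shutdown(socket.SHUT_WR)
    reply = read_all(client)

worker.join()
server.close()
print(reply)  # b'server got: hello'
```

`AF_INET` selects ipv4 addressing and `SOCK_STREAM` selects tcp, so both sides are speaking the same tcp/ip "language" even though the example never deals with cables or signals directly.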

web services

web traffic typically uses http (hypertext transfer protocol). when encryption is added, the protocol becomes https (http secure).

http is an application layer protocol. the application layer is the part of networking closest to what users are actually doing: loading a website, sending an email, or downloading a file all rely on application layer protocols. lower layers handle things like cables, signals, and data delivery, so the application layer can focus on what the user is trying to do.
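to see the http request and response from the web browsing example in action, this sketch runs a tiny web server and a "browser" in one python program using only the standard library (`http.server` and `urllib.request`). the handler class `HelloHandler` and the page content are hypothetical, and everything runs on 127.0.0.1 so no internet access is needed.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """hypothetical web server logic: answer every GET with a tiny html page."""

    def do_GET(self):
        body = b"<h1>hello from the server</h1>"
        self.send_response(200)  # status line: 200 OK
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


# server: bind to loopback on a free port and handle exactly one request
server = HTTPServer(("127.0.0.1", 0), HelloHandler)
port = server.server_address[1]
worker = threading.Thread(target=server.handle_request)
worker.start()

# client (the "browser"): send an http GET and read the response;
# ProxyHandler({}) keeps any system proxy settings out of the way
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(f"http://127.0.0.1:{port}/") as response:
    status = response.status
    page = response.read()

worker.join()
server.server_close()
print(status, page)  # 200 b'<h1>hello from the server</h1>'
```

a real browser does the same thing on a bigger scale: it sends a GET request, receives a status code and headers, then renders the html body it got back.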

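https relies on tls (transport layer security) to encrypt traffic. tls replaced the older ssl (secure sockets layer), which is now considered insecure, although people still often say "ssl" informally.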
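on the client side, using tls mostly means wrapping a normal tcp connection in an encrypted layer. this sketch uses python's standard `ssl` module; `make_client_context` and `fetch_tls_version` are hypothetical helper names, and requiring tls 1.2 or newer is an assumption chosen to match the advice above about avoiding ssl.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """build a client-side tls context with secure defaults."""
    ctx = ssl.create_default_context()  # verifies the server's certificate and hostname
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse ssl and early tls versions
    return ctx


def fetch_tls_version(hostname: str, port: int = 443) -> str:
    """open a tls connection and report which protocol version was negotiated."""
    ctx = make_client_context()
    with socket.create_connection((hostname, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=hostname) as tls:
            return tls.version()  # a string such as "TLSv1.3"


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

with internet access, calling something like `fetch_tls_version("example.com")` would show which tls version the server agreed to; that call is left out here because it depends on the network.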

many popular web servers, such as apache http server and nginx, are open source.

email services

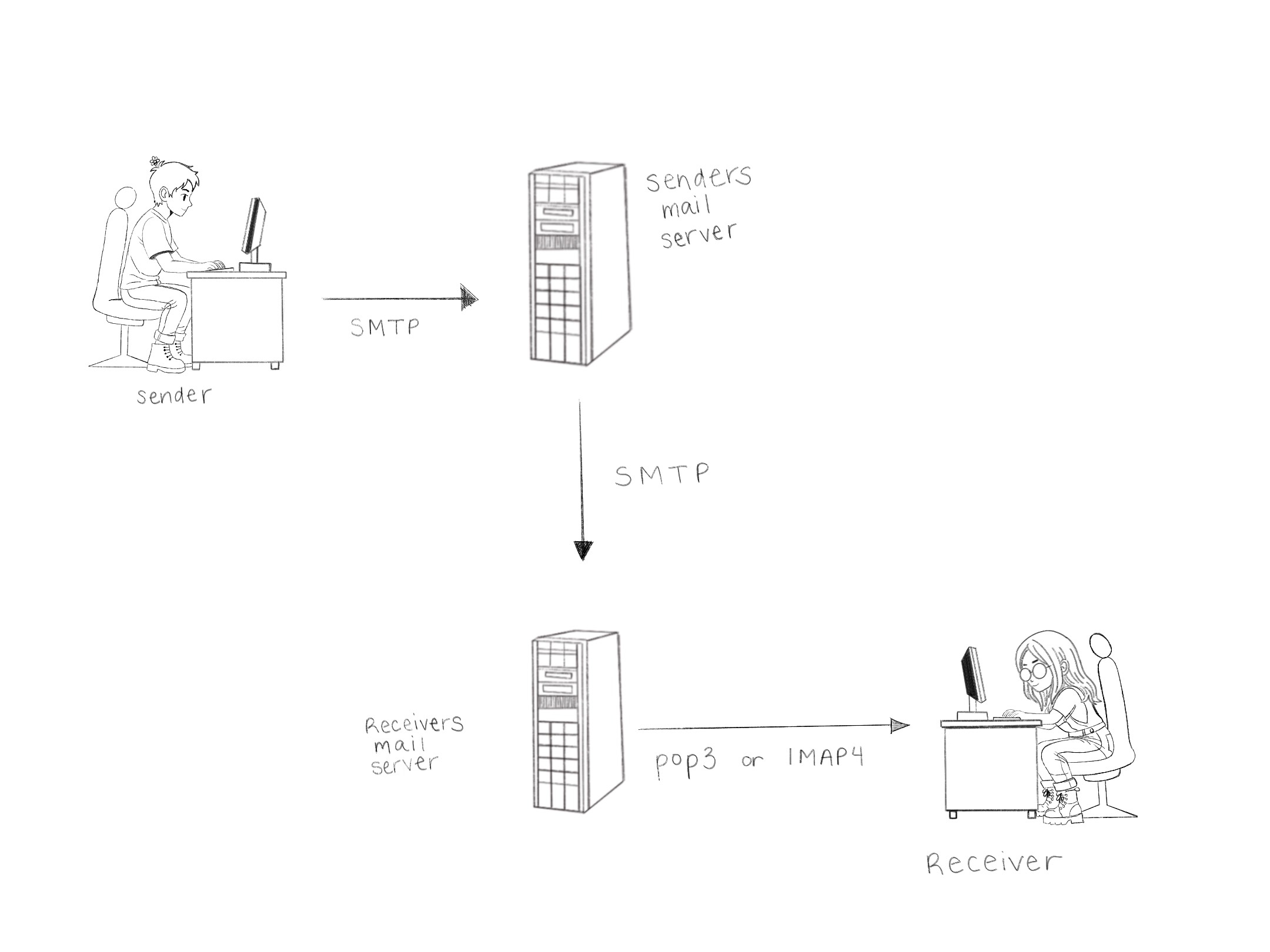

email uses different protocols depending on whether a message is being sent or received.

smtp (simple mail transfer protocol) sends mail from the client to the sender's mail server and then on to the recipient's mail server. once the message arrives, it stays on the recipient's server until the user's email client retrieves it using one of two protocols:

- pop3 downloads messages to the client and usually removes them from the server.

- imap4 keeps messages on the server so they can be accessed from multiple devices.

both pop3 and imap4 can use ssl or tls for security. email servers like microsoft exchange server manage email, while clients such as outlook or browser-based apps like gmail allow users to read and send messages.
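the practical difference between pop3 and imap4 is what happens to the server's copy after a device checks mail. this toy model (the `ToyMailServer` class and its method names are hypothetical) simulates only that behavior, not the real protocols or their commands.

```python
class ToyMailServer:
    """holds messages for one mailbox until a client retrieves them."""

    def __init__(self):
        self.mailbox = []

    def deliver(self, message):
        # stands in for smtp delivering a message to the recipient's server
        self.mailbox.append(message)

    def pop3_style_fetch(self):
        # download everything, then remove it from the server (typical pop3 default)
        messages, self.mailbox = self.mailbox, []
        return messages

    def imap_style_fetch(self):
        # return copies; the originals stay on the server for other devices
        return list(self.mailbox)


pop_server = ToyMailServer()
pop_server.deliver("meeting at 10")
pop_laptop = pop_server.pop3_style_fetch()
pop_phone = pop_server.pop3_style_fetch()
print(pop_laptop, pop_phone)    # ['meeting at 10'] []

imap_server = ToyMailServer()
imap_server.deliver("meeting at 10")
imap_laptop = imap_server.imap_style_fetch()
imap_phone = imap_server.imap_style_fetch()
print(imap_laptop, imap_phone)  # ['meeting at 10'] ['meeting at 10']
```

with pop3-style retrieval, whichever device checks first ends up with the only copy; with imap-style retrieval, the laptop and phone both see the same mailbox.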

dns service

dns (domain name system) works like a contacts list for the internet. instead of remembering long ip addresses, we use easy-to-remember names like google.com, and dns looks up the correct address for us.

companies, internet providers, and public services like google or cloudflare run dns servers so devices can find websites and network resources quickly and reliably.
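you can ask your computer's resolver to do this lookup from code. the sketch below uses python's standard `socket.getaddrinfo`, which checks the local hosts file and then the configured dns servers; `resolve` is a hypothetical helper name. it looks up "localhost" so it works offline, and the exact addresses returned depend on the machine.

```python
import socket


def resolve(hostname: str) -> set:
    """ask the system resolver for all ip addresses behind a name."""
    results = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    # each result is (family, type, proto, canonname, sockaddr);
    # the ip address is the first item of sockaddr for both ipv4 and ipv6
    return {info[4][0] for info in results}


print(resolve("localhost"))
```

"localhost" is usually answered from the hosts file (commonly 127.0.0.1 and/or ::1), while a public name like google.com would be sent to a dns server.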

file transfer services

ftp (file transfer protocol) is a service used to move files between two computers on a network.

regular ftp does not encrypt data, which makes it insecure, but when encryption is added using ssl/tls it becomes ftps, which is much safer.

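older web browsers could act as basic ftp clients, but major browsers such as chrome and firefox have since removed ftp support. dedicated ftp programs are the usual choice and offer more features and better control over file transfers.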

remote access services

some network protocols let a technician remote in, meaning they can access and control another computer from their own device.

telnet is an older tool that allows command-line access, but it does not encrypt data, which makes it insecure. because of this, it has mostly been replaced by more secure options.

ssh (secure shell) is commonly used on linux systems and creates an encrypted connection between two computers, while rdp (remote desktop protocol) is used on windows to securely access another computer's desktop.

these tools are often used when a vendor needs to connect to a company computer to troubleshoot software, where the vendor's computer acts as the client and the company computer is the host.
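a recurring pattern in this section is an unencrypted protocol paired with a secure alternative: telnet and ssh, ftp and ftps, http and https. as a small sketch, that pattern can be turned into a checklist a technician might run against a list of services in use. the `SECURE_REPLACEMENTS` table and `audit` function are hypothetical, and the table only includes pairs named in this section.

```python
# unencrypted protocols from this section, mapped to the secure option it names
SECURE_REPLACEMENTS = {
    "telnet": "ssh",
    "ftp": "ftps",
    "http": "https",
}


def audit(protocols_in_use):
    """return (protocol, suggested replacement) pairs for unencrypted protocols."""
    findings = []
    for proto in protocols_in_use:
        replacement = SECURE_REPLACEMENTS.get(proto.lower())
        if replacement is not None:
            findings.append((proto, replacement))
    return findings


print(audit(["SSH", "telnet", "https", "ftp"]))  # [('telnet', 'ssh'), ('ftp', 'ftps')]
```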